31-40 of 278 results

-

GA Airport Funding Strategies

PI Massoud Bazargan

The purpose of this study was to investigate the current financial environment of publicly owned and operated general aviation (GA) airports and to develop an outlook for their future potential.

The study focused on basic airport demographic data and on the views of GA airport managers regarding their facility's current financial situation, access to state, local, and private-sector funding resources, current fuel handling activity, T-hangar vacancies, other concepts for enhancing revenue, and attitudes toward attaining financial self-sufficiency.

Categories: Faculty-Staff

-

Integration of Small Aircraft Transportation Systems (SATS) into General Aviation

PI Massoud Bazargan

Used operational and simulation approaches to identify potential bottlenecks and to examine future expansion strategies for airports as SATS is integrated into GA.

Categories: Faculty-Staff

-

LAX Tow Tugs Feasibility Study

PI Massoud Bazargan

Currently, United Airlines has no tow tugs at LAX and uses only tractors with pull bars, which are not suited to lengthy tows.

Because tow tugs are expensive, a study is needed to determine whether purchasing them is economically viable. In particular, the airline would like metrics such as net present value (NPV), payback period, and internal rate of return (IRR) over the five years following the purchase.

Categories: Faculty-Staff
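All three metrics can be computed from a projected cash-flow series. The sketch below is only illustrative. It assumes a conventional pattern, with one purchase outlay at year 0 followed by five years of annual savings, and it uses made-up figures. The function names and the bisection approach to IRR are illustrative choices, not the study's model or the airline's data.

```python
"""Five-year NPV, payback, and IRR for a hypothetical tow-tug purchase.

All cash flows below are illustrative placeholders, not airline data.
"""
from typing import List, Optional


def npv(rate: float, cash_flows: List[float]) -> float:
    """Net present value; cash_flows[0] is the purchase at year 0."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows))


def payback_period(cash_flows: List[float]) -> Optional[float]:
    """Years until cumulative undiscounted cash flow reaches zero.

    Interpolates linearly within the break-even year; returns None if the
    purchase is not recovered within the horizon.
    """
    cumulative = cash_flows[0]
    if cumulative >= 0:
        return 0.0
    for year, cf in enumerate(cash_flows[1:], start=1):
        if cumulative + cf >= 0:
            # fraction of this year needed to cover the remaining shortfall
            return (year - 1) + (-cumulative / cf)
        cumulative += cf
    return None


def irr(cash_flows: List[float], lo: float = -0.99, hi: float = 1.0,
        tol: float = 1e-10, max_iter: int = 200) -> float:
    """Internal rate of return (rate where NPV = 0), found by bisection.

    Assumes one outflow followed by inflows, so NPV decreases with the rate
    and has a single root inside [lo, hi].
    """
    f_lo = npv(lo, cash_flows)
    if f_lo * npv(hi, cash_flows) > 0:
        raise ValueError("IRR is not bracketed by [lo, hi]")
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        f_mid = npv(mid, cash_flows)
        if f_lo * f_mid <= 0:
            hi = mid               # root lies in [lo, mid]
        else:
            lo, f_lo = mid, f_mid  # root lies in [mid, hi]
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    # Hypothetical: $100k purchase, $30k/year savings for 5 years.
    flows = [-100_000.0] + [30_000.0] * 5
    print(f"NPV @ 8%: {npv(0.08, flows):,.0f}")
    print(f"Payback:  {payback_period(flows):.2f} years")
    print(f"IRR:      {irr(flows):.2%}")
```

Bisection is slower than Newton's method. It was chosen here because it cannot diverge once the root is bracketed, which suits a five-point cash-flow series.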

-

Manpower Planning for Maintenance Crew at SFO

PI Massoud Bazargan

The objective of this project is to optimize the use of maintenance manpower at San Francisco International Airport, making efficient use of available resources and reducing flight delays.

The project simulates one full day of an airline's operations. The resulting simulation model identifies the number of delays, as well as their total duration, that may occur throughout the system because of a shortage of maintenance workforce.

Categories: Faculty-Staff
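A heavily simplified version of this kind of model can be built with a priority queue of crew availability times. The sketch rests on three assumptions: each aircraft needs a single maintenance task, tasks are served first-come, first-served by the time the aircraft becomes ready, and any crew can perform any task. The `Task` fields and the `simulate_day` function are hypothetical. This is not the project's simulation model.

```python
"""Toy one-day model: maintenance tasks competing for a limited crew pool.

Hypothetical simplification, not the project's simulation model.
"""
import heapq
from typing import List, NamedTuple, Tuple


class Task(NamedTuple):
    ready: float      # minute the aircraft becomes available for maintenance
    duration: float   # minutes of crew work the task needs
    departure: float  # scheduled departure minute


def simulate_day(tasks: List[Task], num_crews: int) -> Tuple[int, float]:
    """Return (number of delayed departures, total delay in minutes).

    Tasks are taken first-come, first-served by ready time, and each one goes
    to whichever crew becomes free earliest.
    """
    crew_free_at = [0.0] * num_crews  # min-heap: when each crew is next free
    heapq.heapify(crew_free_at)
    delayed, total_delay = 0, 0.0
    for task in sorted(tasks, key=lambda t: t.ready):
        free_at = heapq.heappop(crew_free_at)
        start = max(task.ready, free_at)  # wait for both aircraft and crew
        finish = start + task.duration
        heapq.heappush(crew_free_at, finish)
        delay = finish - task.departure
        if delay > 0:
            delayed += 1
            total_delay += delay
    return delayed, total_delay


if __name__ == "__main__":
    day = [Task(0, 60, 70), Task(10, 60, 80), Task(20, 30, 60)]
    for crews in (1, 2, 3):
        count, minutes = simulate_day(day, crews)
        print(f"{crews} crew(s): {count} delayed, {minutes:.0f} delay minutes")
```

In this toy schedule, one crew produces two delayed departures totaling 130 minutes, and three crews remove all delays. Sweeping the crew count this way shows how a shortage translates into both the number and the duration of delays.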

-

NextGen Task G 4D FMS TBO Demonstration Benefits Analyses

PI Massoud Bazargan

The goal of the project was to leverage existing technology and Flight Management System (FMS) capabilities as a starting point to define standards and requirements for trajectory exchange, time of arrival control, and other building blocks.

The analyses focus on the benefits of these standards and requirements.

Categories: Faculty-Staff

-

Statistical Analysis for General Aviation Accidents

PI Massoud Bazargan

The identification of causal factors for problems within a complex system presents a variety of challenges to the investigator.

This project will proceed by examining existing data on GA accidents, applying data mining methods to highlight patterns, applying mathematical and statistical methods to model relationships, and finally employing simulation to test, refine, and verify the results.

Categories: Faculty-Staff
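The sketch below illustrates one simple pattern-highlighting step. It counts how often pairs of causal factors appear together in the same accident record, which is the pair-counting pass used in frequent-itemset mining. The factor labels and records are invented for illustration and do not come from any accident database. The project's actual data mining methods are not specified here.

```python
"""Count pairs of causal factors that co-occur in accident records.

Records and factor labels are invented for illustration.
"""
from collections import Counter
from itertools import combinations
from typing import Iterable, List, Set, Tuple

Pair = Tuple[str, str]


def frequent_factor_pairs(records: Iterable[Set[str]],
                          min_support: int) -> List[Tuple[Pair, int]]:
    """Return (factor pair, count) for pairs seen in >= min_support records,
    most frequent first."""
    counts: Counter = Counter()
    for factors in records:
        # sorting gives each unordered pair one canonical (a, b) form
        for pair in combinations(sorted(factors), 2):
            counts[pair] += 1
    return [(pair, n) for pair, n in counts.most_common() if n >= min_support]


if __name__ == "__main__":
    records = [
        {"weather", "fuel_exhaustion", "night"},
        {"weather", "night"},
        {"spatial_disorientation", "weather", "night"},
        {"fuel_exhaustion", "mechanical"},
    ]
    for pair, n in frequent_factor_pairs(records, min_support=2):
        print(pair, n)
```

Pairs that clear the support threshold are candidates for the later modeling and simulation steps. Counting alone does not establish a causal relationship.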

-

Tow Tug Simulation Feasibility Study

PI Massoud Bazargan

This study uses simulation to assess feasibility for AirTran Airways at its hub at Hartsfield-Jackson Atlanta International Airport (ATL). The study concerns using super-tugs to tow aircraft to and from the airline's maintenance facility.

The purchase price of these super-tugs is around a quarter of a million dollars. The study investigates the possibility of reducing costs through jet fuel savings. It uses simulation to analyze the annual savings by studying the number of super-tugs needed, as well as their utilization and operating cost. The results enable the airline to clearly evaluate the costs and benefits of purchasing new super-tugs.

Categories: Faculty-Staff
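Estimating how many tugs are needed, and how busy each one is, can be framed as an interval-partitioning problem: given a fixed schedule of tows, assign each tow to a free tug or add a new one. This sketch assumes tow start and end times are known in advance and ignores repositioning time between tows. The schedule and the `assign_tugs` function are hypothetical. The study itself used a full simulation rather than this greedy calculation.

```python
"""Minimum tug fleet and per-tug busy time for a fixed tow schedule.

Hypothetical schedule; repositioning time between tows is ignored.
"""
import heapq
from typing import List, Tuple


def assign_tugs(tows: List[Tuple[float, float]]) -> Tuple[int, List[float]]:
    """Assign each (start, end) tow to a tug; return (fleet size, busy time per tug).

    Greedy interval partitioning: take tows in start order and reuse the tug
    that frees up earliest if it is already free, otherwise add a tug. For a
    fixed schedule this uses the fewest tugs possible.
    """
    free_at: List[Tuple[float, int]] = []  # min-heap of (time free, tug id)
    busy: List[float] = []
    for start, end in sorted(tows):
        if free_at and free_at[0][0] <= start:
            _, tug = heapq.heappop(free_at)
        else:
            tug = len(busy)  # no tug is free yet: add one
            busy.append(0.0)
        busy[tug] += end - start
        heapq.heappush(free_at, (end, tug))
    return len(busy), busy


if __name__ == "__main__":
    # (start, end) minutes for four tows in a 70-minute window
    tows = [(0, 30), (10, 40), (35, 60), (45, 70)]
    horizon = 70.0
    fleet, busy = assign_tugs(tows)
    print(f"tugs needed: {fleet}")
    for tug, minutes in enumerate(busy):
        print(f"tug {tug}: {minutes / horizon:.0%} utilized")
```

The fleet size and utilization figures are what a cost comparison would then multiply by purchase price, operating cost, and fuel saved per tow.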

-

The Rise and Fall of the Veterans' Airlines

PI Alan Bender

This is an investigation into a practically unknown chapter in U.S. airline history: the advent of a brand new breed of airlines in the aftermath of World War II, mom-and-pop discounters immensely popular with the general public but very threatening to the established airlines and to the federal regulatory system.

Postwar America was a land full of opportunity. Economically and socially, in both industry and education, it was the dawn of a new era. But such was not the case in the U.S. airline industry. New technology meant faster, bigger, safer, more comfortable aircraft, yet air travel remained unaffordable to the vast majority of Americans. This is the story of opportunity lost to rampant government protectionism and powerful vested interests. A book now in preparation draws on historical materials from the National Air and Space Museum, the National Archives, the Library of Congress, four presidential libraries, and various oral history collections. It documents the history of these long-forgotten, yet historically very significant, airline companies that truly pioneered affordable air transportation in America.

Categories: Faculty-Staff

-

Discontinuity-driven mesh adaptation method for hyperbolic conservation laws

PI Mihhail Berezovski

The proposed project aims to develop a highly accurate, efficient, and robust one-dimensional adaptive-mesh computational method for simulating the propagation of discontinuities in solids. The research focuses principally on developing a new mesh adaptation technique and an accurate discontinuity tracking algorithm that will enhance the accuracy and efficiency of computations. The main idea is to combine the flexibility of a dynamically moving mesh with the increased accuracy and efficiency of a discontinuity tracking algorithm, while preserving the stability of the scheme.

Key features of the proposed method are accuracy and stability. These will be ensured by the ability of the adaptive technique to keep the modified mesh as close as possible to the original fixed mesh. To achieve this goal, a special monitor function is introduced along with an accurate grid reallocation technique. The resulting method is based on the thermodynamically consistent numerical algorithm for wave and front propagation formulated in terms of excess quantities. It incorporates special numerical techniques for accurate and efficient interface tracking, together with a dynamic grid reconstruction function. Numerical results from this method will be compared with fixed-mesh results for phase-transition front propagation in solids and densification front propagation in metal foam, to justify the effectiveness and correctness of the proposed framework.

This project will contribute significantly to the development of corresponding methods in higher dimensions, including dynamic crack propagation problems. Modern high-resolution finite-volume methods for the propagation of discontinuities in solids, together with supplementary techniques, are essential for a broad class of problems arising in today's science. The broader impact of the project also includes education. The method will be incorporated into future projects for computational mathematics majors, who will gain experience in state-of-the-art computational science.

Categories: Faculty-Staff
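The text above does not specify the monitor function or the reallocation step. For reference, a classic way to reallocate a 1D grid is equidistribution. Nodes are placed so that every cell contains an equal share of the integral of a monitor function, here the common arc-length monitor M = sqrt(1 + alpha * u_x^2). This standard technique is shown only for illustration. It is not the project's special monitor function or its discontinuity-tracking algorithm. `alpha`, the test profile, and the function names are assumptions.

```python
"""1D mesh reallocation by equidistributing a monitor function.

Classic de Boor-style equidistribution, shown for illustration only.
"""
import math
from bisect import bisect_right
from typing import List


def arc_length_monitor(x: List[float], u: List[float], alpha: float = 1.0) -> List[float]:
    """M_i = sqrt(1 + alpha * (du/dx)_i^2), with du/dx from finite differences
    (central inside, one-sided at the ends)."""
    n = len(x)
    m = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        dudx = (u[hi] - u[lo]) / (x[hi] - x[lo])
        m.append(math.sqrt(1.0 + alpha * dudx * dudx))
    return m


def equidistribute(x: List[float], monitor: List[float], n_nodes: int) -> List[float]:
    """Place n_nodes on [x[0], x[-1]] so each new cell holds an equal share of
    the integral of the monitor (assumed positive).

    The monitor is treated as constant on each old cell (the mean of its two
    end values), so the cumulative integral W is piecewise linear and can be
    inverted exactly by linear interpolation.
    """
    w = [0.0]
    for i in range(1, len(x)):
        w.append(w[-1] + 0.5 * (monitor[i] + monitor[i - 1]) * (x[i] - x[i - 1]))
    total = w[-1]
    new_x = [x[0]]
    for k in range(1, n_nodes - 1):
        target = total * k / (n_nodes - 1)
        j = bisect_right(w, target) - 1  # w[j] <= target < w[j + 1]
        frac = (target - w[j]) / (w[j + 1] - w[j])
        new_x.append(x[j] + frac * (x[j + 1] - x[j]))
    new_x.append(x[-1])
    return new_x


if __name__ == "__main__":
    # A steep front at x = 0.5 on a uniform background grid (hypothetical profile).
    x = [i / 200 for i in range(201)]
    u = [math.tanh((xi - 0.5) / 0.02) for xi in x]
    new_x = equidistribute(x, arc_length_monitor(x, u), 41)
    widths = [b - a for a, b in zip(new_x, new_x[1:])]
    print(f"smallest cell {min(widths):.4f}, largest cell {max(widths):.4f}")
```

Cells cluster where the solution is steep and stay wide where it is smooth. A scheme that also wants to stay close to the original fixed mesh, as described above, would typically blend this target with the old grid or smooth the monitor.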

-

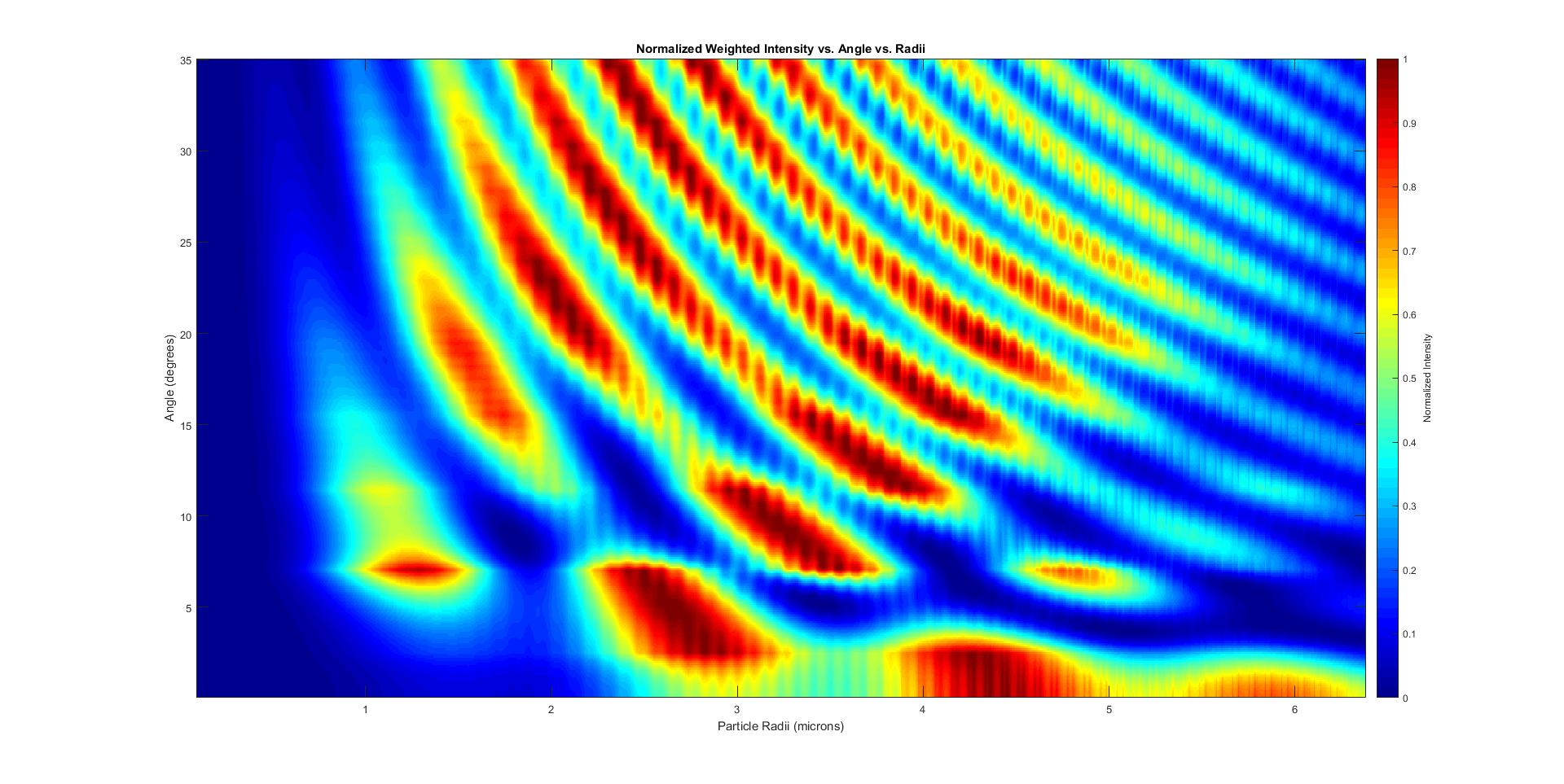

2017 PICMath: Mie Scattering Diagnostic

PI Mihhail Berezovski

CO-I Clayton Birchenough

CO-I Christopher Swinford

CO-I Tilden Roberson

CO-I Sophie Jorgensen

The Signal Processing and Applied Mathematics Research Group at the Nevada National Security Site (NNSS) teamed up with Embry-Riddle Aeronautical University (ERAU) on a research project under the framework of the MAA PIC Math program. The challenge was to recommend whether Mie scattering, a technique used in the air quality industry, could be repurposed to measure the sizes of particles emitted from a metal surface when it is shocked by explosives.

Using simulated data derived from Mie scattering theory and existing codes provided by NNSS, students validated the simulated measurement system. The data construction procedure was implemented with an additional choice between two discretization techniques: randomly distributed particle radii and incrementally discretized particle radii. The critical regions for sensor positions were also determined.
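The two discretization choices can be pictured as two ways of generating the radii used to build a simulated data set. The per-particle response in this sketch is a placeholder, the geometric cross-section pi*r^2, not a Mie scattering calculation. The radius range, sample sizes, and function names are hypothetical. The point is only to contrast evenly spaced radii with randomly drawn ones.

```python
"""Two ways to discretize particle radii when constructing simulated data.

The response function is a placeholder (geometric cross-section), not Mie theory.
"""
import math
import random
from typing import Callable, List


def incremental_radii(r_min: float, r_max: float, n: int) -> List[float]:
    """n evenly spaced radii: midpoints of n equal bins on [r_min, r_max]."""
    step = (r_max - r_min) / n
    return [r_min + (i + 0.5) * step for i in range(n)]


def random_radii(r_min: float, r_max: float, n: int, seed: int = 0) -> List[float]:
    """n radii drawn uniformly from [r_min, r_max]; seeded so runs repeat."""
    rng = random.Random(seed)
    return [rng.uniform(r_min, r_max) for _ in range(n)]


def mean_response(radii: List[float], response: Callable[[float], float]) -> float:
    """Average a per-particle response over the discretized radii."""
    return sum(response(r) for r in radii) / len(radii)


def cross_section(r: float) -> float:
    """Placeholder per-particle response: geometric cross-section pi * r^2."""
    return math.pi * r * r


if __name__ == "__main__":
    r_min, r_max = 0.1, 1.0  # hypothetical radius range (arbitrary units)
    exact = math.pi * (r_min**2 + r_min * r_max + r_max**2) / 3.0
    grid = mean_response(incremental_radii(r_min, r_max, 200), cross_section)
    mc = mean_response(random_radii(r_min, r_max, 200), cross_section)
    print(f"exact {exact:.4f}  incremental {grid:.4f}  random {mc:.4f}")
```

Evenly spaced radii converge quickly for smooth responses. Random radii avoid grid-aligned artifacts, but their sampling noise shrinks only with the square root of the sample count.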

Support for this project is provided by MAA PIC Math (Preparation for Industrial Careers in Mathematics) Program funded by the National Science Foundation (NSF grant DMS-1345499).

Major outcomes:

- The team presented its results as a poster at 2017 MathFest

- Results were presented at the ERAU campus showcase

- Results were presented at 2018 ERAU Discovery Day

- Clayton Birchenough received an internship with the Nevada National Security Site for Summer 2017 and Summer 2018

- Tilden Roberson received a co-op position with NASA's Armstrong Flight Research Center for Fall 2017

- Tilden Roberson is currently pursuing his master's degree at ERAU

- Joao Rocha Belmonte received an internship in Germany with MTU Aero Engines for Fall 2017

- Joao Rocha Belmonte is currently pursuing his master's degree at ERAU

- Clayton Birchenough won 2nd place for a poster presentation at 2018 ERAU Discovery Day

Results were published in:

Kasey Bray, Clayton Birchenough, Marylesa Howard, and Aaron Luttman (2017). Mie scattering analysis. National Security Technologies, LLC, internal report.

Categories: Undergraduate
